Introduction

Proxmox Virtual Environment (Proxmox VE) is an open-source virtualization platform with support for Kernel-based Virtual Machine (KVM) and Linux Containers (LXC). Proxmox provides a web-based management interface, CLI tools, and a REST API for management, as well as extensive documentation, including the detailed Proxmox VE Administration Guide, manual pages, and an API viewer.

This tutorial focuses on the installation and network configuration of Proxmox VE on Hetzner servers, since some specific guidelines must be followed.

Step 1 - Installation

There are several ways to install Proxmox VE on a dedicated Hetzner root server. You can either install Debian 13 and upgrade to Proxmox VE afterwards, or install from the official ISO image via QEMU.

1.1 Installing Proxmox VE on Debian

- Boot the server into the Rescue System.

- Run installimage, then select and install Debian 13 (trixie).

- Configure the RAID level, hostname, and partitioning.

- Save the configuration. After the installation completes, reboot the server.

- Once the server becomes reachable again, follow the official guide on how to upgrade from Debian 13 to Proxmox.

1.2 Installation with ZFS

Proxmox can also be installed via QEMU using the official ISO. With this method, you start the installer in a virtual machine from the Hetzner Rescue System. The network, however, needs to be configured before the server is rebooted, because the network interface is virtualized inside the VM. The drives inside the VM are shown as /dev/vdX, which is normal because they are attached using the virtio interface.

Preparing the Environment

Before starting the installation, forward a local port and connect to your server with the following command:

ssh -L 5900:localhost:5900 root@your_server_ip

Copy the link address of your image and download it in the Rescue System using wget:

wget https://enterprise.proxmox.com/iso/proxmox-ve_9.0-1.iso

Now you can start a virtual machine in the Rescue System, pass the drives, and boot from the ISO. Copy the following command:

qemu-system-x86_64 \
  -enable-kvm \
  -cpu host \
  -m 16G \
  -boot d \
  -cdrom ./proxmox-ve_9.0-1.iso \
  -drive file=/dev/nvme0n1,format=raw,if=virtio \
  -drive file=/dev/nvme1n1,format=raw,if=virtio \
  -bios /usr/share/OVMF/OVMF_CODE.fd \
  -vnc 127.0.0.1:0

(The -bios /usr/share/OVMF/OVMF_CODE.fd \ line is for EFI servers only. Remove the line if the BIOS is set to legacy. If you are unsure you can use [ -d "/sys/firmware/efi" ] && echo "UEFI" || echo "BIOS" to check if the server is on EFI.)

root@rescue ~ # [ -d "/sys/firmware/efi" ] && echo "UEFI" || echo "BIOS"
UEFI

(If you have SATA drives, adjust the device names accordingly, for example /dev/sda instead of /dev/nvme0n1.)

Use a VNC client to connect to 127.0.0.1; the installer should appear.

Please note that the VNC client may time out once you press install. If it does, simply reconnect to 127.0.0.1.
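The firmware check and the QEMU invocation can be combined so that the -bios option is only added on UEFI systems. The following is a minimal sketch and not part of the original procedure: the function names build_qemu_cmd and detect_firmware are hypothetical, and the helper only prints the command so that it can be reviewed before it is run.

```shell
# Sketch: print the QEMU installer command, adding -bios only for UEFI.
# build_qemu_cmd is a hypothetical helper; it prints text and does not start QEMU.
build_qemu_cmd() {
    local firmware iso cmd disk
    firmware="$1"
    iso="$2"
    shift 2
    cmd="qemu-system-x86_64 -enable-kvm -cpu host -m 16G -boot d -cdrom $iso"
    # One virtio drive per remaining argument.
    for disk in "$@"; do
        cmd="$cmd -drive file=$disk,format=raw,if=virtio"
    done
    # The OVMF firmware image is only needed when the server boots via UEFI.
    if [ "$firmware" = "UEFI" ]; then
        cmd="$cmd -bios /usr/share/OVMF/OVMF_CODE.fd"
    fi
    cmd="$cmd -vnc 127.0.0.1:0"
    printf '%s\n' "$cmd"
}

# Same check as used in the tutorial text.
detect_firmware() {
    [ -d "/sys/firmware/efi" ] && echo "UEFI" || echo "BIOS"
}

build_qemu_cmd "$(detect_firmware)" ./proxmox-ve_9.0-1.iso /dev/nvme0n1 /dev/nvme1n1
```

For SATA drives, the device arguments would be /dev/sda and /dev/sdb instead.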

Configure the main interface

Once the installation process has finished, stop QEMU (ctrl + c in the terminal window). Before rebooting the server, the network interface name must be adjusted in /etc/network/interfaces. In order to figure out the true interface name, you can use the predict-check command:

root@rescue ~ # predict-check
eth0 -> enp0s31f6

So in this example enp0s31f6 would be the true interface name. The interface that is configured in the Rescue system can be viewed with netdata:

root@rescue ~ # netdata
Network data:
   eth0  LINK: yes
         MAC:  aa:bb:cc:dd:cc:ff
         IP:   192.168.1.100/24
         IPv6: (none)
         Intel(R) PRO/1000 Network Driver

Now boot up Proxmox using the same method as before, but without the installation medium, and connect to 127.0.0.1. Copy the following command:

qemu-system-x86_64 \
  -enable-kvm \
  -cpu host \
  -m 16G \
  -boot d \
  -drive file=/dev/nvme0n1,format=raw,if=virtio \
  -drive file=/dev/nvme1n1,format=raw,if=virtio \
  -bios /usr/share/OVMF/OVMF_CODE.fd \
  -vnc 127.0.0.1:0

Log in and edit the network configuration in /etc/network/interfaces:

nano /etc/network/interfaces

Adjust the interface name, save the file, and reboot the server. For example, this would be a basic configuration:

root@pve:~# cat /etc/network/interfaces
auto lo
iface lo inet loopback

auto enp0s31f6
iface enp0s31f6 inet static
  address your_server_ip/32
  gateway your_server_gateway

Step 2 - Network Configuration

Hetzner has a strict IP/MAC binding to ensure security and prevent spoofing. In some scenarios, only a routed setup is possible. For example, additional subnets, single failover IPs and failover subnets are always MAC-bound and statically routed on the main IP address of the server, and can only be configured in a routed setup.

In some cases, the additional subnet (with the exception of the failover subnet) can also be bound to the server's additional IP/MAC address if a virtual MAC address is generated in the Robot Panel. This can be requested directly via a support ticket.

By default, the main and additional single IP addresses are bound to the server’s main MAC address. For bridged setups, you need to generate a virtual MAC address in the Robot panel.

Enable IP Forwarding on the Host

With a routed setup, the bridges (vmbr0, for example) are not connected to the physical interface. You need to activate IP forwarding on the host system. Please note that packet forwarding between network interfaces is disabled in the default Hetzner installation.
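Forwarding can be switched on at runtime or persistently. As a sketch of the persistent variant, assuming that systemd-sysctl reads drop-in files from /etc/sysctl.d (the Debian default), the two settings can be written to a small configuration file. The function name write_forwarding_conf and the file name 99-forwarding.conf are hypothetical, and the example writes to a temporary directory rather than to the live system.

```shell
# Sketch: write a sysctl drop-in that enables IPv4 and IPv6 forwarding.
# write_forwarding_conf and the file name 99-forwarding.conf are hypothetical.
write_forwarding_conf() {
    local dir
    dir="${1:-/etc/sysctl.d}"
    printf '%s\n' \
        'net.ipv4.ip_forward=1' \
        'net.ipv6.conf.all.forwarding=1' > "$dir/99-forwarding.conf"
}

# Dry run against a temporary directory instead of /etc/sysctl.d.
tmpdir="$(mktemp -d)"
write_forwarding_conf "$tmpdir"
cat "$tmpdir/99-forwarding.conf"
```

Called without an argument on the host, the function would write to /etc/sysctl.d, where the file is picked up when systemd-sysctl is restarted and at every boot.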

For IPv4 and IPv6:

echo 1 > /proc/sys/net/ipv4/ip_forward
echo 1 > /proc/sys/net/ipv6/conf/all/forwarding

Apply the changes:

systemctl restart systemd-sysctl

Check if the forwarding is active:

sysctl net.ipv4.ip_forward
sysctl net.ipv6.conf.all.forwarding

Add the network configuration

Choose one of the variants:

- Routed Setup

- Bridged Setup

- vSwitch with a public subnet

  » Assign IP addresses to VMs/containers from a vSwitch with a public subnet.

- Hetzner Cloud Network

  » Establish a connection between the virtual machines/LXC in Proxmox and the Hetzner Cloud Network.

- Masquerading (NAT)

  » Enable VMs with private IPs to have internet access through the host's public IP.

2.1 Routed Setup

In a routed configuration, the IP address of the host's bridge serves as the gateway by default, and a route to the virtual machine has to be added manually when the additional IP does not belong to the same subnet. That is why we set the mask to /32: devices such as vmbr0 only route traffic for IP addresses that fall within their own subnet. With a /32 mask, each additional IP is treated as its own network, which ensures that traffic reaches the correct destination even if the additional IP comes from a different subnet.
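To illustrate the /32 idea: each additional single IP becomes its own host route on the bridge. The helper below is a small sketch that prints the corresponding post-up lines for a bridge stanza in /etc/network/interfaces. The function name host_route_lines is hypothetical, and the addresses are documentation examples.

```shell
# Sketch: emit one "post-up ip route add <ip>/32 dev <bridge>" line per address.
# host_route_lines is a hypothetical helper; it only prints text.
host_route_lines() {
    local bridge ip
    bridge="$1"
    shift
    for ip in "$@"; do
        printf 'post-up ip route add %s/32 dev %s\n' "$ip" "$bridge"
    done
}

host_route_lines vmbr0 192.0.2.20 198.51.100.30
```

Because every route is a /32, it does not matter whether an address comes from the same subnet as the main IP or from a foreign one.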

- Host system Routed

We will use the following example addresses to represent a real-use-case scenario:

- Main IP: 198.51.100.10/24

- Gateway of the main IP: 198.51.100.1/24

- Additional Subnet: 203.0.113.0/24

- Additional single IP (foreign subnet): 192.0.2.20/24

- Additional single IP (same subnet): 198.51.100.30/24

- Failover single IP: 172.17.234.100/32

- Failover subnet: 172.17.234.0/24

- IPv6: 2001:DB8::/64

Please note that you will have to restart the network after applying the network configuration for the Proxmox host to add the static routes. If virtual machines or containers are running during the network restart, you will need to stop (not just restart) and then start them again. This ensures that their point-to-point connections to the host are recreated properly.

systemctl restart networking

# /etc/network/interfaces
auto lo
iface lo inet loopback
iface lo inet6 loopback

auto enp0s31f6
iface enp0s31f6 inet static
  address 198.51.100.10/32 # Main IP
  gateway 198.51.100.1 # Gateway
  post-up echo 1 > /proc/sys/net/ipv4/ip_forward
  post-up echo 1 > /proc/sys/net/ipv6/conf/all/forwarding

# IPv6 for the main interface
iface enp0s31f6 inet6 static
  address 2001:db8::2/128 # /128 on the main interface, /64 on the bridge
  gateway fe80::1

# Bridge for single IP's (foreign and same subnet)
auto vmbr0
iface vmbr0 inet static
  address 198.51.100.10/32 #Main IP
  bridge-ports none
  bridge-stp off
  bridge-fd 0
  post-up ip route add 192.0.2.20/32 dev vmbr0 # Additional IP from a foreign subnet
  post-up ip route add 198.51.100.30/32 dev vmbr0 # Additional IP from the same subnet
  post-up ip route add 172.17.234.100/32 dev vmbr0 # Failover IP

# IPv6 for the bridge
iface vmbr0 inet6 static
  address 2001:db8::3/64 # Should not be the same address as the main Interface

# Additional Subnet 203.0.113.0/24
auto vmbr1
iface vmbr1 inet static
  address 203.0.113.1/24 # Set one usable IP from the subnet range
  bridge-ports none
  bridge-stp off
  bridge-fd 0

# Failover Subnet 172.17.234.0/24
auto vmbr2
iface vmbr2 inet static
  address 172.17.234.1/24 # Set one usable IP from the subnet range
  bridge-ports none
  bridge-stp off
  bridge-fd 0

- Guest system Routed (Debian 12)

The IP of the bridge in the host system is always used as the gateway, i.e. the main IP for individual IPs and the IP from the subnet configured in the host system for subnets.

Guest configuration:

- With an additional IP from the same subnet:

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet static
  address 198.51.100.30/32 # Additional IP
  gateway 198.51.100.10 # Main IP

# IPv6
iface ens18 inet6 static
  address 2001:DB8::4/64 # IPv6 address of the subnet
  gateway 2001:DB8::3 # Bridge Address

- With a foreign Additional IP:

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet static
  address 192.0.2.20/32 # Additional IP from a foreign subnet
  gateway 198.51.100.10 # Main IP

- With an IP assigned from the additional subnet

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet static
  address 203.0.113.10/24 # Subnet IP
  gateway 203.0.113.1 # Gateway is the IP of the bridge (vmbr1)

- With an IP assigned from the failover subnet

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet static
  address 172.17.234.10/24 # Subnet IP
  gateway 172.17.234.1 # Gateway is the IP of the bridge (vmbr2)

- Single failover IP

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet static
  address 172.17.234.100/32 # Failover IP
  gateway 198.51.100.10 # The gateway IP is the main IP
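The gateway rule for routed guests can be summarized in a few lines: single and failover IPs (/32) use the main IP of the host, while addresses from a subnet use the bridge IP of that subnet. The following sketch covers IPv4 only; MAIN_IP, routed_gateway, and guest_stanza are hypothetical names chosen for illustration, and the addresses are the example values used in this section.

```shell
# Sketch: choose the gateway for a routed guest and print its IPv4 stanza.
# MAIN_IP, routed_gateway and guest_stanza are hypothetical names for illustration.
MAIN_IP="198.51.100.10"   # example main IP of the host

# /32 addresses (single or failover IPs) use the main IP as gateway;
# subnet addresses use the bridge IP passed as the second argument.
routed_gateway() {
    case "$1" in
        */32) printf '%s\n' "$MAIN_IP" ;;
        *)    printf '%s\n' "${2:-}" ;;
    esac
}

# Print the interface stanza for a guest: interface, address, optional bridge IP.
guest_stanza() {
    local iface address gateway
    iface="$1"
    address="$2"
    gateway="$(routed_gateway "$address" "${3:-}")"
    printf 'auto %s\niface %s inet static\n  address %s\n  gateway %s\n' \
        "$iface" "$iface" "$address" "$gateway"
}

guest_stanza ens18 192.0.2.20/32
guest_stanza ens18 203.0.113.10/24 203.0.113.1
```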


2.2 Bridged Setup

When setting up Proxmox in bridged mode, it is absolutely crucial to request virtual MAC addresses for each single additional IP address through the Robot Panel (virtual MAC addresses can only be generated for single additional IP addresses). In this mode, the host acts as a transparent bridge and is not part of the routing path. This means that packets arriving at the router will have the source MAC address of the virtual machines. If the source MAC address is not recognized by the router, the traffic will be flagged as "Abuse" and might lead to the server being blocked. Therefore, it is essential to request virtual MAC addresses in the Robot Panel.

- Host system Bridged

We configure only the main IP of the server here. The additional IPs will be configured in the Guest Systems.

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto enp0s31f6
iface enp0s31f6 inet manual

auto vmbr0
iface vmbr0 inet static
  address 198.51.100.10/32 # Main IP
  gateway 198.51.100.1 # Gateway
  bridge-ports enp0s31f6
  bridge-stp off
  bridge-fd 0

- Guest system Bridged (Debian 12)

Here we use the gateway of the additional IP, or if the additional IP is within the same subnet as the main IP, we use the gateway of the main IP.

Static configuration:

# /etc/network/interfaces
auto ens18
iface ens18 inet static
  address 192.0.2.20/32 # Additional IP
  gateway 192.0.2.1 # Additional Gateway IP

In bridged mode, DHCP can also be used to automatically configure the network settings. However, it is essential to configure the virtual machine to use the virtual MAC address obtained from the Robot Panel for the specific IP configuration.

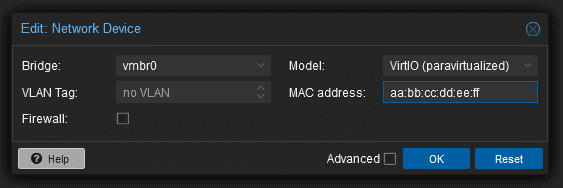

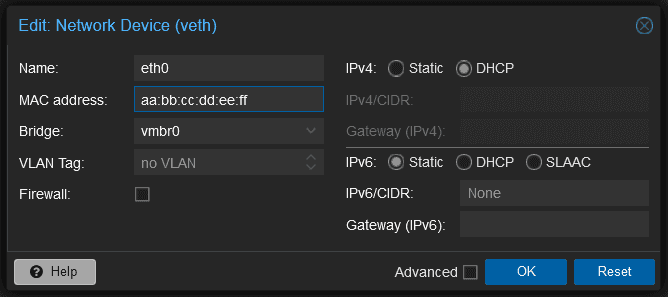

You can also adjust this manually in the virtual machine itself, in /etc/network/interfaces:

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet dhcp
  hwaddress ether aa:bb:cc:dd:ee:ff # The MAC address is just an example

You can do the same thing for LXC containers via the Proxmox GUI. Simply click on the container, navigate to "Network", and then click on the bridge. Select DHCP and add the correct MAC address from the Robot Panel (in our case the example would be aa:bb:cc:dd:ee:ff).
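Since a mistyped MAC address in bridged mode means that the router will not recognize the guest, it can help to check the format before pasting the value into a guest configuration. The sketch below only validates the notation (six colon-separated hex pairs); it cannot tell whether the address is actually the one assigned in the Robot Panel. The function name is_valid_mac is hypothetical.

```shell
# Sketch: check that a string looks like a MAC address
# (six pairs of hex digits separated by colons).
# is_valid_mac is a hypothetical helper; it checks the format only.
is_valid_mac() {
    printf '%s\n' "$1" | grep -Eq '^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'
}

if is_valid_mac "aa:bb:cc:dd:ee:ff"; then
    echo "MAC format ok"
else
    echo "MAC format invalid"
fi
```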

2.3 vSwitch with a public subnet

Proxmox can also be connected directly to a Hetzner vSwitch that handles routing for a public subnet, so that IP addresses from that subnet can be assigned directly to VMs and containers. The setup has to be bridged, and a virtual interface has to be created so that packets can reach the vSwitch. The bridge does not need to be VLAN-aware, and no VLAN configuration is required inside the VMs or LXC containers; the tagging is done by the subinterface, in our example enp0s31f6.4009. Every packet that passes through this interface is tagged with the appropriate VLAN ID. (Please note that this configuration is meant for the LXC/VMs. If you would like the host itself to be able to communicate with the vSwitch, you will have to create an additional routing table.) In this case, we will use 203.0.113.0/24 as an example subnet.

- Host system configuration:

# /etc/network/interfaces
auto enp0s31f6.4009
iface enp0s31f6.4009 inet manual

auto vmbr4009
iface vmbr4009 inet static
  bridge-ports enp0s31f6.4009
  bridge-stp off
  bridge-fd 0
  mtu 1400
#vSwitch Subnet 203.0.113.0/24

- Guest system configuration:

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet static
  address 203.0.113.2/24 # Subnet IP from the vSwitch
  gateway 203.0.113.1 # vSwitch Gateway
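The host stanza follows a fixed pattern: the VLAN subinterface is named after the physical interface and the VLAN ID, and the bridge uses that subinterface as its only port. The sketch below prints this pattern for a given interface and VLAN ID. The function name vswitch_stanza is hypothetical, and the accepted range of 4000 to 4091 is an assumption about the VLAN IDs available for vSwitches that should be checked against the value shown in Robot.

```shell
# Sketch: print the host stanza for a vSwitch VLAN.
# vswitch_stanza is a hypothetical helper; the 4000-4091 range is an
# assumption and should be verified against the vSwitch settings in Robot.
vswitch_stanza() {
    local nic vlan
    nic="$1"
    vlan="$2"
    if [ "$vlan" -lt 4000 ] || [ "$vlan" -gt 4091 ]; then
        echo "VLAN ID $vlan is outside the assumed vSwitch range 4000-4091" >&2
        return 1
    fi
    # The subinterface <nic>.<vlan> tags all traffic; the bridge needs no VLAN awareness.
    cat <<EOF
auto $nic.$vlan
iface $nic.$vlan inet manual

auto vmbr$vlan
iface vmbr$vlan inet static
  bridge-ports $nic.$vlan
  bridge-stp off
  bridge-fd 0
  mtu 1400
EOF
}

vswitch_stanza enp0s31f6 4009
```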

2.4 Hetzner Cloud Network

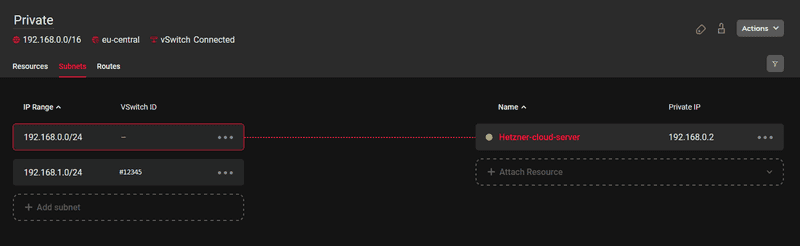

It is also possible to establish a connection between the virtual machines/LXC in Proxmox with the Hetzner Cloud Network. For the sake of this example, let's assume that you have already setup your Cloud Network and have added the vSwitch with the following configuration:

192.168.0.0/16- Your cloud network (parent network)192.168.0.0/24- Cloud server subnet192.168.1.0/24- vSwitch (#12345)

The configuration should look something like this:

As in the previous example, we first create a virtual interface and define the VLAN ID, which in our case is enp0s31f6.4000. We also have to add a route to the Cloud Network 192.168.0.0/16 via the vSwitch. Please note that adding a route to the Hetzner Cloud Network and assigning an IP address from the private subnet range of the vSwitch to the bridge is only necessary if the Proxmox host itself is to communicate with the Hetzner Cloud Network.

- Host system configuration:

# /etc/network/interfaces
auto enp0s31f6.4000
iface enp0s31f6.4000 inet manual

auto vmbr4000
iface vmbr4000 inet static
  address 192.168.1.10/24
  bridge-ports enp0s31f6.4000
  bridge-stp off
  bridge-fd 0
  mtu 1400
  post-up ip route add 192.168.0.0/16 via 192.168.1.1 dev vmbr4000
#vSwitch-to-cloud Private Subnet 192.168.1.0/24

- Guest system configuration

# /etc/network/interfaces
auto lo
iface lo inet loopback

auto ens18
iface ens18 inet static
  address 192.168.1.2/24
  gateway 192.168.1.1
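The single route 192.168.0.0/16 via 192.168.1.1 is sufficient because both /24 ranges, the cloud server subnet and the vSwitch subnet, lie inside the parent /16 network. The following sketch checks this kind of containment with plain shell arithmetic. The function names ip_to_int and in_cidr are hypothetical, and the helper handles IPv4 only.

```shell
# Sketch: test whether an IPv4 address lies inside a CIDR range.
# ip_to_int and in_cidr are hypothetical helpers (IPv4 only).

# Convert a dotted-quad address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<EOF
$1
EOF
    echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Succeed if address $1 is inside network $2 (for example 192.168.0.0/16).
in_cidr() {
    local addr net bits mask
    addr="$(ip_to_int "$1")"
    net="$(ip_to_int "${2%/*}")"
    bits="${2#*/}"
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

for ip in 192.168.0.5 192.168.1.2 10.0.0.1; do
    if in_cidr "$ip" 192.168.0.0/16; then
        echo "$ip is covered by the route to 192.168.0.0/16"
    else
        echo "$ip is not covered by the route to 192.168.0.0/16"
    fi
done
```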

2.5 Masquerading (NAT)

Exposing the virtual machines/LXC containers to the internet is also possible without configuring or having any additional public IP addresses. Hetzner has a strict IP/MAC binding, which means that traffic that is not routed properly can be flagged as abuse and lead to the server being blocked. To avoid this problem, we can route the traffic from the LXC/VMs through the main interface of the host. This ensures that the MAC address is the same across all network packets. Masquerading enables virtual machines with private IP addresses to reach the internet through the host's public IP address for outbound communication. iptables rewrites each outgoing packet so that it appears to come from the host, and incoming replies are adjusted so that they can be directed back to the original sender.
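A masquerading rule is typically added when the private bridge comes up and removed again when it goes down, which is why it appears as a post-up/post-down pair (-A appends the rule, -D deletes it). As a small sketch, the helper below prints such a pair for a given private subnet and outgoing interface. The function name masquerade_lines is hypothetical, and the helper only prints text; it does not touch iptables.

```shell
# Sketch: print the paired post-up/post-down MASQUERADE lines for a private subnet.
# masquerade_lines is a hypothetical helper; it prints text and changes no rules.
masquerade_lines() {
    local subnet out_if hook action
    subnet="$1"
    out_if="$2"
    for hook in post-up post-down; do
        # post-up appends the rule (-A), post-down deletes the same rule (-D).
        if [ "$hook" = "post-up" ]; then
            action="-A"
        else
            action="-D"
        fi
        printf "%s iptables -t nat %s POSTROUTING -s '%s' -o %s -j MASQUERADE\n" \
            "$hook" "$action" "$subnet" "$out_if"
    done
}

masquerade_lines 172.16.16.0/24 enp0s31f6
```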

# /etc/network/interfaces
auto lo
iface lo inet loopback
iface lo inet6 loopback

auto enp0s31f6
iface enp0s31f6 inet static
  address 198.51.100.10/24
  gateway 198.51.100.1
  post-up echo 1 > /proc/sys/net/ipv4/ip_forward
#post-up iptables -t nat -A PREROUTING -i enp0s31f6 -p tcp -m multiport ! --dports 22,8006 -j DNAT --to 172.16.16.2
#post-down iptables -t nat -D PREROUTING -i enp0s31f6 -p tcp -m multiport ! --dports 22,8006 -j DNAT --to 172.16.16.2

auto vmbr4
iface vmbr4 inet static
  address 172.16.16.1/24
  bridge-ports none
  bridge-stp off
  bridge-fd 0
  post-up iptables -t nat -A POSTROUTING -s '172.16.16.0/24' -o enp0s31f6 -j MASQUERADE
  post-down iptables -t nat -D POSTROUTING -s '172.16.16.0/24' -o enp0s31f6 -j MASQUERADE
#NAT/Masq

Please note that the following rules are not necessary for the LXC/VMs to have internet access. They are optional and serve to make a specific VM/container reachable from outside. They redirect all incoming TCP traffic, except on ports 22 and 8006 (22 is excluded so that you can still connect to Proxmox via SSH, and 8006 is the port of the web interface), to a designated virtual machine at 172.16.16.2 within the subnet. This is a common setup for router VMs like pfSense, where all incoming traffic is redirected to the router VM and then routed accordingly.

post-up iptables -t nat -A PREROUTING -i enp0s31f6 -p tcp -m multiport ! --dports 22,8006 -j DNAT --to 172.16.16.2
post-down iptables -t nat -D PREROUTING -i enp0s31f6 -p tcp -m multiport ! --dports 22,8006 -j DNAT --to 172.16.16.2

Step 3 - Security

The web interface is protected by two different authentication methods:

- Proxmox VE standard authentication (Proxmox proprietary authentication)

- Linux PAM standard authentication

Nevertheless, additional protection measures are recommended to guard against the exploitation of security vulnerabilities and other attacks.

Here are several possibilities:

Conclusion

By now, you should have installed and configured Proxmox VE as a virtualization platform on your server.

Proxmox VE also supports clustering. You can find details in the tutorial "Setting up your own public cloud with Proxmox on Hetzner bare metal".