Introduction

This tutorial explains how to set up DeepSeek (a large language model) using Ollama, an AI management tool that makes running AI models more convenient than ever. It also provides some background information about reasoning models and explains how they work.

Prerequisites

- One server with Ubuntu/Debian

  - You need access to the root user or a user with sudo permissions.

  - Before you start, you should complete some basic configuration, including a firewall.

- Minimum hardware requirements

  - CPU: Ideally an Intel/AMD CPU that supports AVX512 or DDR5, but this is not required. To check, you can run:

    lscpu | grep -o 'avx512' && sudo dmidecode -t memory | grep -i "DDR5"

  - RAM: 16GB

  - Disk space: about 50GB

  - GPU: Recommended

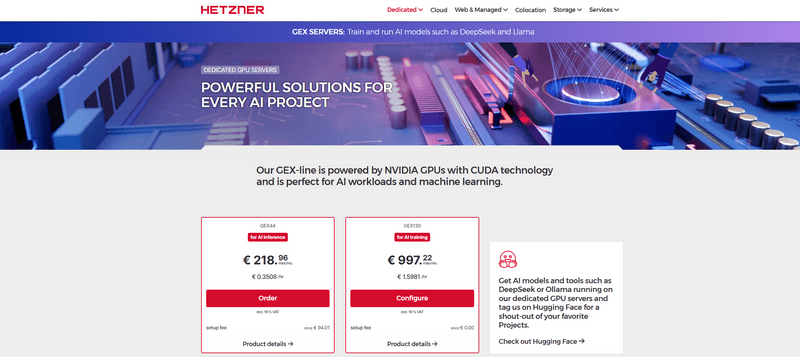

The tutorial was tested on a Hetzner server of the GEX series with a CUDA-enabled GPU to run Ollama efficiently. We recommend selecting a Linux distribution.
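The AVX512 part of the hardware check can also be scripted. The following is a minimal sketch, assuming an x86 Linux system that exposes CPU features in `/proc/cpuinfo`; the function name `avx512_flags` is made up for this example, and the DDR5 check is left out because `dmidecode` needs root permissions.

```python
from pathlib import Path


def avx512_flags(cpuinfo_text: str) -> set[str]:
    """Return all CPU flags starting with 'avx512' found in /proc/cpuinfo text."""
    found: set[str] = set()
    for line in cpuinfo_text.splitlines():
        # On x86 Linux the feature list sits on lines like "flags : fpu vme ..."
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found.update(flag for flag in value.split() if flag.startswith("avx512"))
    return found


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    text = cpuinfo.read_text() if cpuinfo.exists() else ""
    flags = avx512_flags(text)
    print("AVX512 flags found:" if flags else "No AVX512 flags found.", *sorted(flags))
```

An empty result only means that no AVX512 flag was listed. As noted above, AVX512 is not required to follow this tutorial.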

Step 1 - Concept and use-cases of reasoning models

As you are most likely aware, DeepSeek R1 is a reasoning model: an advanced type of AI designed to perform tasks that require logical, analytical, and contextual understanding, mimicking human-like cognitive processes such as problem-solving, deduction, inference, and decision-making. Unlike traditional AI systems that rely solely on pattern recognition or rule-based operations, a reasoning model is built to understand context, draw conclusions from incomplete information, and make decisions based on logical deductions.

- How the model is trained:

A key aspect of the smaller DeepSeek R1 models is distillation. Instead of storing vast amounts of raw information, distillation trains a model to replicate the reasoning and outputs of more powerful LLMs across a wide range of questions and scenarios. This enables DeepSeek to deliver accurate answers while maintaining a significantly smaller size. As a result, advanced AI becomes more accessible to smaller developers and hobbyists.

Traditional large models are often limited by the datasets they were trained on. Reasoning models, however, learn from the outputs of multiple LLMs. By distilling knowledge from diverse sources, DeepSeek benefits from a broader range of information.
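The data-collection side of distillation can be pictured with a toy sketch. This is an illustration only, not DeepSeek's actual training pipeline: `build_distillation_dataset` and `toy_teacher` are hypothetical names, and `toy_teacher` merely stands in for a large reasoning model. The smaller model would then be fine-tuned on the collected records, a step that is not shown here.

```python
from typing import Callable


def build_distillation_dataset(
    prompts: list[str],
    teacher: Callable[[str], str],
) -> list[dict[str, str]]:
    """Pair each prompt with the teacher's full output (reasoning plus answer)."""
    return [{"prompt": p, "completion": teacher(p)} for p in prompts]


def toy_teacher(prompt: str) -> str:
    # Hypothetical stand-in for a large reasoning model.
    return f"<think>Working through: {prompt}</think>Answer to: {prompt}"


dataset = build_distillation_dataset(["What is 2 + 2?"], toy_teacher)
print(dataset[0]["completion"])
```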

- Scenarios in which the model is used:

Reasoning models focus on processing information to make logical deductions and solve complex problems. They are useful in applications requiring decision-making based on data. If you provide some information, the model can use it to infer new knowledge or solve problems in a structured way.

- How the model responds:

When you ask a question, the model will return an output that consists of two sections:

| Section | Description |
| --- | --- |
| Chain of Thought | The model "thinks" through the problem. It performs a step-by-step analysis, lays out intermediate steps, and explains its reasoning process. |
| Answer | The final result or conclusion derived from the reasoning process. |

In the terminal, it will look like this:

<think>
Okay, so I need to ...
</think>
In conclusion, you ...
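If you process the output in a script, you may want to separate the two sections. The following is a minimal sketch, assuming the response contains at most one `<think>...</think>` block followed by the answer; `split_response` is a helper name made up for this example.

```python
import re

THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_response(text: str) -> tuple[str, str]:
    """Return (chain_of_thought, answer) from a DeepSeek R1 style response."""
    match = THINK_PATTERN.search(text)
    if match is None:
        # No <think> block found: treat the whole text as the answer.
        return "", text.strip()
    thought = match.group(1).strip()
    answer = text[match.end():].strip()
    return thought, answer


thought, answer = split_response(
    "<think> Okay, so I need to ... </think> In conclusion, you ..."
)
print(answer)
```

Keeping the chain of thought separate makes it easy to log the reasoning while showing only the answer to end users.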

Step 2 - Install Ollama

We will take advantage of Ollama. For a quick start, you can use the installation script:

curl -fsSL https://ollama.com/install.sh | sh

This will start the installation on your machine. Once it is completed, you are ready to go.

If you want to install Ollama manually, you can follow step 1 of the tutorial "Hosting an AI chatbot with Ollama and Open WebUI".
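To confirm that the installation worked, you could check that the `ollama` binary is on your `PATH` and ask it for its version. The following is a small sketch; `ollama_version` is a helper name chosen for this example, and the exact text printed by `ollama --version` depends on the installed release.

```python
import shutil
import subprocess
from typing import Optional


def ollama_version() -> Optional[str]:
    """Return the output of `ollama --version`, or None if ollama is not on PATH."""
    binary = shutil.which("ollama")
    if binary is None:
        return None
    result = subprocess.run(
        [binary, "--version"], capture_output=True, text=True, check=False
    )
    # Some tools print version details to stderr, so fall back to it if stdout is empty.
    return (result.stdout or result.stderr).strip()


version = ollama_version()
print(version if version is not None else "ollama was not found on PATH")
```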

Step 3 - Run DeepSeek

In the terminal, you can now start any AI model available with Ollama (see the list of available language models). Here, we will showcase the open-source reasoning model DeepSeek R1. Ollama provides all available model sizes (1.5b, 7b, 8b, 14b, 32b, 70b and 671b parameters). With the Hetzner server GEX130, you can run up to a 70b model with quantization. On the Hetzner server GEX44, we recommend starting with the 7b model and scaling up as needed.
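For a rough sense of why larger models need more GPU memory, you can estimate the size of the weights from the parameter count. The sketch below assumes 4-bit quantization, which is an assumption made for this example because the text above does not name a quantization level. It also ignores the context cache and runtime overhead, so real memory use will be higher. `approx_weight_size_gb` is a made-up helper name.

```python
def approx_weight_size_gb(params_billion: float, bits_per_weight: int = 4) -> float:
    """Rough size of the model weights alone, in gigabytes (10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # billions of parameters * bytes per parameter = gigabytes
    return params_billion * bytes_per_weight


for size in (1.5, 7, 8, 14, 32, 70, 671):
    print(f"{size}b: about {approx_weight_size_gb(size):.1f} GB of weights at 4-bit")
```

Treat these numbers as a lower bound when deciding which model size to try first.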

ollama run deepseek-r1:7b

You can now start asking questions. In some cases, the model may randomly include Chinese text in its responses. This is a known issue. If this happens, try adding "answer in English" to your request.
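Besides the interactive prompt, Ollama also serves a local HTTP API that you can call from a script. The following is a minimal sketch, assuming the server listens on its default address `http://localhost:11434` and that the `deepseek-r1:7b` model has already been pulled. `build_payload` and `ask` are helper names made up for this example, and the "Answer in English." suffix mirrors the workaround mentioned above.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default local address


def build_payload(prompt: str, model: str = "deepseek-r1:7b", english_only: bool = True) -> dict:
    """Build the JSON body for a single, non-streaming generate request."""
    if english_only:
        prompt = f"{prompt}\n\nAnswer in English."
    return {"model": model, "prompt": prompt, "stream": False}


def ask(prompt: str, **kwargs) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    body = json.dumps(build_payload(prompt, **kwargs)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["response"]


if __name__ == "__main__":
    print(json.dumps(build_payload("Why is the sky blue?"), indent=2))
    # print(ask("Why is the sky blue?"))  # uncomment when an Ollama server is running
```

With `"stream": False`, the server returns one JSON object instead of a stream of partial responses, which keeps the example short.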

Step 4 - Use DeepSeek

Below are two examples: one decision-making request and one coding request.

- Reasoning example

Let's ask the following question:

What is better — a reasoning model or a language model?

DeepSeek R1 will return something that looks like this:

<think>
Alright, so I’m trying to figure out whether a reasoning model is better than a language model or vice versa. First off, I need to understand what each of these actually does.

A **language model** seems pretty straightforward—it's about understanding and generating human language. So if I were to ask it to write a essay or translate something, it would do that for me. It probably learns from vast amounts of text data to get better at predicting the next word or sentence.

On the other hand, a **reasoning model** sounds more complex. From what I gather, reasoning models aren't just about language; they also process information and make logical deductions based on that information. If you gave it some facts, it could use those to infer new knowledge or solve problems in a structured way.

So comparing the two, it seems like a language model is specialized for handling linguistic tasks, while a reasoning model handles more cognitive tasks involving logic and inference. But I’m not sure if one is inherently better than the other—it probably depends on what you need for your task.

Maybe it's about knowing how they complement each other. Like in real-world scenarios, you often need both language to communicate and reasoning to understand context or make decisions based on information. So perhaps using them together provides a more comprehensive solution.

I also wonder if there are situations where one outperforms the other exclusively. For example, if I'm building an app that needs to have deep conversations with users (like chatbots), a language model would be essential for making the interactions natural and coherent. But if the app needs to understand complex data or solve intricate problems based on that data, then maybe a reasoning model would add more value.

Another thought is about their applications. Language models are widely used in areas like NLP tasks (Natural Language Processing), text generation, translation, etc., because they're so fundamental to how we communicate. Reasoning models might be more niche but crucial for specialized AI systems that need to perform logical reasoning, such as search engines, expert systems, or autonomous systems.

I also think about the advancements in each area. Language models have seen huge improvements with things like transformer architectures (e.g., BERT, GPT), making them more accurate and versatile. Reasoning models might benefit from advancements in logic-based AI or probabilistic reasoning, but they could be slower or less accessible to develop compared to language models.

Moreover, there's the aspect of integration. Combining both capabilities into a single model might offer more flexibility and robustness for complex tasks. However, this also adds complexity and computational resources, which isn't always feasible.

In summary, it seems like neither is universally better than the other. It really depends on what you're trying to achieve—whether it's handling language-based tasks with a language model or tackling cognitive reasoning tasks with a reasoning model.
</think>

In conclusion, whether a **reasoning model** or a **language model** is "better" depends entirely on the specific task or application at hand. Here's a summary of the key points:

1. **Language Models**: These are specialized for understanding and generating human language. They excel in tasks such as text generation, translation, summarization, and conversational AI (e.g., chatbots). Advances like transformer architectures (e.g., BERT, GPT) have significantly enhanced their capabilities.

2. **Reasoning Models**: These focus on processing information to make logical deductions and solve complex problems. They are useful in applications requiring decision-making based on data, such as expert systems or autonomous systems.

3. **Complementary Use**: Often, the best solutions integrate both approaches. Language models handle communication, while reasoning models provide cognitive capabilities. This combination can lead to more comprehensive AI systems tailored to specific needs.

In this reasoning example, you can see that the model starts by defining both options before making any comparisons or judgments. It examines their strengths and avoids making premature conclusions by exploring various perspectives and scenarios.

- Coding example

Let's ask the following question:

What does a simple Python script look like that returns "Hello World!"?

DeepSeek R1 will return something that looks like this:

<think>
Okay, so I need to write a simple Python script that outputs "Hello World!". Hmm, let me think about how to do this. I remember from my classes that in Python, you can print messages using the print() function. So maybe it's as simple as writing something like print("Hello World!"). But wait, is there more to it?

I guess I should make sure that the string inside the print function is exactly "Hello World!". Let me check if I need quotes around each letter or not. No, in Python, you can just put the entire string inside the quotes and pass it directly to print(). So writing print("Hello World!") should do it.

Wait, are there any other requirements? The user asked for a simple script that returns "Hello World!", so maybe they just want the code without any extra explanations or error handling. That makes sense because adding too much might complicate things when someone is just starting out.

I should also consider if I need to run it in a specific environment, but since it's Python, as long as the syntax is correct, it should work on most setups. Maybe the user doesn't need to worry about that for such a simple script.

Putting it all together, the script would be just one line: print("Hello World!"). I don't think there are any imports needed here because this is a straightforward print statement without any functions or classes being called from libraries. So that should do it.
</think>

Here's a simple Python script that outputs "Hello World!":

```python
print("Hello World!")
```

When you run this script, it will display the text "Hello World!" in the console.

In this coding example, you can see that the model already knows the answer within the first couple of sentences. Instead of responding immediately, it questions its own reasoning and explores if it missed anything or made any other mistakes. It also analyses different scenarios and environments before it actually responds.

Conclusion

In this tutorial, you learned a little about the technical concepts behind DeepSeek and how to use it. We recommend experimenting with the different parameter sizes of DeepSeek R1 and with the other AI models available on ollama.com/search. The ollama run <model_name> command is the same for all Ollama-based models.

For some inspiration on how to make DeepSeek more convenient to use, we recommend this in-depth tutorial on how to set up Open WebUI along with AI models.