Introduction

We all know how much of a headache installing Kubernetes manually is, especially scaling it as your workload grows. Yet, in the realm of modern containerized applications, Kubernetes remains the undisputed champion in orchestrating workloads efficiently. Fortunately, there's a light at the end of the tunnel. In this article, we're diving headfirst into the process of building your own scalable Kubernetes cluster on Hetzner Cloud with Terraform.

This article covers how to create, scale, access, and destroy your own cluster, and how to calculate the costs of running the cluster.

Prerequisites

- Hetzner API token in the Hetzner Console

- SSH key

- Your own SSH key to access the nodes.

- A new SSH key for the worker nodes. You can use one SSH key for all worker nodes.

- Basic knowledge of Docker and Kubernetes

- Terraform installed on your local machine

Terminology

- Hetzner Cloud:

hcloud - Hetzner Console API token:

<your_api_token> nodeis a fancy word for a server- Your own SSH key

- Private:

<your_private_ssh_key> - Public:

<your_public_ssh_key>

- Private:

- New SSH key for worker nodes

- Private:

<worker_private_ssh_key> - Public:

<worker_public_ssh_key>

- Private:

Step 1 - Create the project

Create a new folder on your local machine. You can name it however you want. Here we'll go with kubernetes-cluster.

mkdir kubernetes-cluster && cd kubernetes-clusterIn that folder, create two files named main.tf and .tfvars.

touch main.tf && touch .tfvarsmain.tfwill be used to declare hcloud terraform resources..tfvarswill be used to configure the hcloud provider with your API token and hide it at the same time.

Step 1.1 - Setup the hcloud provider

After creating the two files, add the following to the main.tf file:

You can view the latest version of

hetznercloud/hcloudin the Terraform Registry.

# Tell Terraform to include the hcloud provider

terraform {

required_providers {

hcloud = {

source = "hetznercloud/hcloud"

# Here we use version 1.56.0, this may change in the future

version = "1.56.0"

}

}

}

# Declare the hcloud_token variable from .tfvars

variable "hcloud_token" {

sensitive = true # Requires terraform >= 0.14

}

# Configure the Hetzner Cloud Provider with your token

provider "hcloud" {

token = var.hcloud_token

}Right now, this will fail to initialize the hcloud provider because the variable hcloud_token has not yet been declared

in .tfvars. To fix this, add the following line to the .tfvars file:

Replace

<your_api_token>with your actual Hetzner Cloud API token.

hcloud_token = "<your_api_token>"Just like a .env file, you can use the .tfvars file to initialize secrets and global variables that you don't want in

your code.

Step 1.2 Initialize the project

Lastly, run the following command to initialize the project and download the hcloud provider. Before you run the command, make sure Terraform is installed as mentioned in the prerequisites.

terraform initThe output log should be similar to this:

Initializing the backend...

Initializing provider plugins...

- Finding hetznercloud/hcloud versions matching "1.50.0"...

- Installing hetznercloud/hcloud v1.50.0...

- Installed hetznercloud/hcloud v1.50.0 (signed by a HashiCorp partner, key ID 5219EACB3A77198B)

Terraform has been successfully initialized!Step 2 - Setup your master node

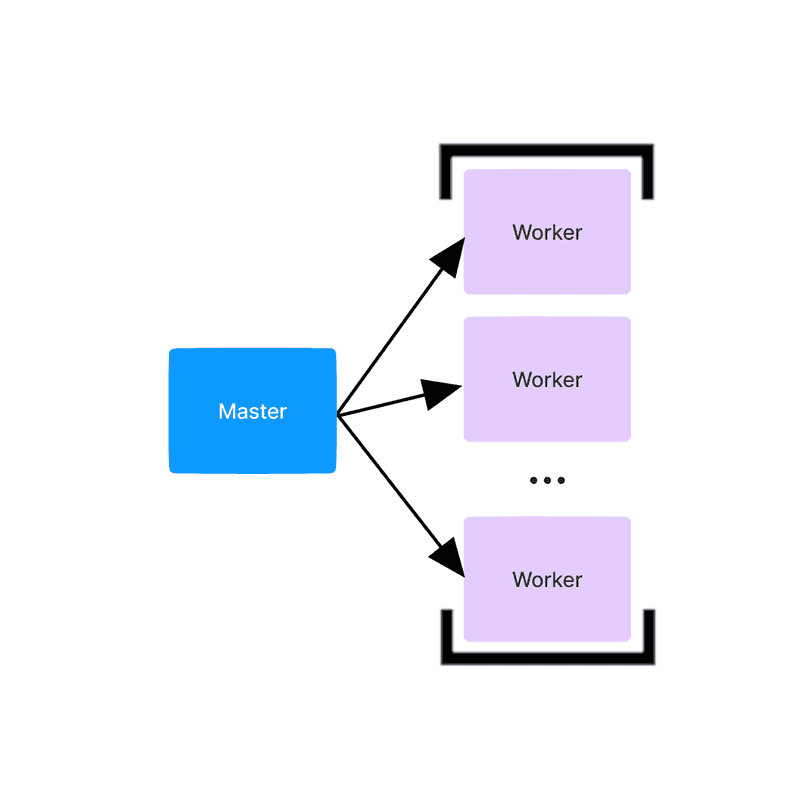

Now that Terraform is ready for hcloud, we need to create the most important part of the cluster, the master node.

The master node controls all of the other worker nodes, it's the brain of the cluster.

Step 2.1 - Create a private network for the cluster

Later on, you'll want to add more nodes — worker nodes. The master node and the worker nodes need to communicate without being exposed to the public. For this, they need to be in a private network.

Add the following code to your main.tf file:

resource "hcloud_network" "private_network" {

name = "kubernetes-cluster"

ip_range = "10.0.0.0/16"

}

resource "hcloud_network_subnet" "private_network_subnet" {

type = "cloud"

network_id = hcloud_network.private_network.id

network_zone = "eu-central"

ip_range = "10.0.1.0/24"

}

output "network_id" {

value = hcloud_network.private_network.id

}This will create a private network named kubernetes-cluster that will be used by the nodes to communicate with each

other.

Step 2.2 Create the master node

When creating a node on hcloud, you can add what is called a cloud-init script to it. This script is executed

when the node is created, and you can use it to install software, configure the node, and more. In this case, we'll use

it to install Kubernetes.

Step 6 explains how to create an example deployment with the Hetzner Cloud Controller Manager. For this to work, you have to disable the K3s CCM by adding the flags --disable-cloud-controller and --kubelet-arg cloud-provider=external in the cloud-init script.

Create cloud-init.yaml and add the following content:

Replace

<your_public_ssh_key>with the public SSH key of your local machine and<worker_public_ssh_key>with the public SSH key for your worker node(s).

#cloud-config

packages:

- curl

users:

- name: cluster

ssh-authorized-keys:

- <your-ssh-public-key>

- <worker_public_ssh_key>

sudo: ALL=(ALL) NOPASSWD:ALL

shell: /bin/bash

runcmd:

- apt-get update -y

- curl https://get.k3s.io | INSTALL_K3S_EXEC="--disable traefik --disable-cloud-controller --kubelet-arg cloud-provider=external" sh -

- chown cluster:cluster /etc/rancher/k3s/k3s.yaml

- chown cluster:cluster /var/lib/rancher/k3s/server/node-tokenYou need to add the public SSH key for the worker node so that the worker node can copy the Kubernetes token from the master node.

You might have noticed that instead of installing k8s the traditional way, we're using k3s. This is because k3s is a

lightweight k8s distribution that makes it way easier to install and manage k8s clusters. This tutorial would be way too

long if we were to install k8s the traditional way.

Also, we're disabling traefik because we'll be using nginx as an ingress controller for later on.

Before we continue, you must choose a plan for your master node. You can find the available

plans here. Because k3s is optimized for Arm64 architecture, we'll go with the cax11 plan

from hcloud's Arm64 instances.

| Plan | CPU | Memory | Disk |

|---|---|---|---|

cax11 |

2 | 4GB | 40GB |

Add the following code to your main.tf file:

Replace

cax11with the plan you would like to use.

resource "hcloud_server" "master-node" {

name = "master-node"

image = "ubuntu-24.04"

server_type = "cax11"

location = "fsn1"

public_net {

ipv4_enabled = true

ipv6_enabled = true

}

network {

network_id = hcloud_network.private_network.id

# IP Used by the master node, needs to be static

# Here the worker nodes will use 10.0.1.1 to communicate with the master node

ip = "10.0.1.1"

}

user_data = file("${path.module}/cloud-init.yaml")

# If we don't specify this, Terraform will create the resources in parallel

# We want this node to be created after the private network is created

depends_on = [hcloud_network_subnet.private_network_subnet]

}Step 3 - Setup your worker nodes

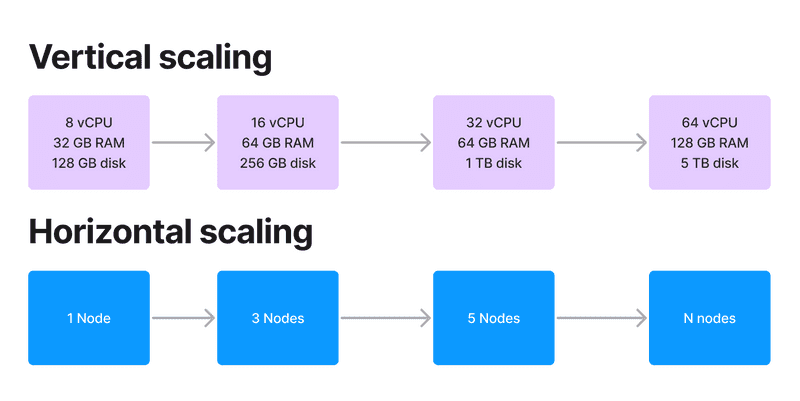

Now that the master node is ready, we need to give it some more juice. While you can scale the master node by giving it more CPU's and memory, eventually you'll hit a limit (this is called vertical scaling). This is where worker nodes come in. You can have an unlimited amount of worker nodes (this is called horizontal scaling).

The cloud-init-worker.yaml file will be similar to the cloud-init.yaml file, but it will not install k3s the same

way. Instead, it will join the worker node to the master node with a command similar to this:

curl -sfL https://get.k3s.io | K3S_URL=https://10.0.1.1:6443 K3S_TOKEN=<will_be_diffferent_every_time> sh -Step 6 explains how to create an example deployment with the Hetzner Cloud Controller Manager. For this to work, you have to add the flag --kubelet-arg cloud-provider=external in the cloud-init script.

Create cloud-init-worker.yaml and add the following content:

Replace

<your_public_ssh_key>with the public SSH key of your local machine and<worker_private_ssh_key>with the private SSH key for your worker node(s).

#cloud-config

packages:

- curl

users:

- name: cluster

ssh-authorized-keys:

- <your_public_ssh_key>

sudo: ALL=(ALL) NOPASSWD:ALL

shell: /bin/bash

write_files:

- path: /root/.ssh/id_rsa

content: |

-----BEGIN OPENSSH PRIVATE KEY-----

<worker_private_ssh_key>

-----END OPENSSH PRIVATE KEY-----

permissions: "0600"

runcmd:

- apt-get update -y

- # wait for the master node to be ready by trying to connect to it

- until curl -k https://10.0.1.1:6443; do sleep 5; done

- # copy the token from the master node

- REMOTE_TOKEN=$(ssh -o StrictHostKeyChecking=accept-new cluster@10.0.1.1 sudo cat /var/lib/rancher/k3s/server/node-token)

- # Install k3s worker

- curl -sfL https://get.k3s.io | K3S_URL=https://10.0.1.1:6443 K3S_TOKEN=$REMOTE_TOKEN INSTALL_K3S_EXEC="--kubelet-arg cloud-provider=external" sh -

You need to add the private SSH key for the worker node so that the worker node can copy the Kubernetes token from the master node.

Next, edit main.tf to create the worker nodes with the cloud-init script above.

You can put resources in a loop in Terraform, so you can create as many worker nodes as you want. The example below will create 3 worker nodes but you can change it to any other number. If you set the count to 1, it will create only one worker node. Add the following code to your main.tf file:

Replace

cax11with the plan you would like to use.

Replacecount = 3with however many worker nodes you want to create.

resource "hcloud_server" "worker-nodes" {

count = 3

# The name will be worker-node-0, worker-node-1, worker-node-2...

name = "worker-node-${count.index}"

image = "ubuntu-24.04"

server_type = "cax11"

location = "fsn1"

public_net {

ipv4_enabled = true

ipv6_enabled = true

}

network {

network_id = hcloud_network.private_network.id

}

user_data = file("${path.module}/cloud-init-worker.yaml")

depends_on = [hcloud_network_subnet.private_network_subnet, hcloud_server.master-node]

}Step 4 - Deploy your cluster

NOTE: Always make sure to calculate the costs of running your cluster before deploying it. You can figure out how in "Step 8".

Now that you have your master and worker nodes, you can simply run terraform apply to create the cluster.

Note that running the command below will create new servers in Hetzner Cloud that will be charged.

terraform apply -var-file .tfvarsThe output log should be similar to this:

Plan: 4 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

hcloud_network.private_network: Creating...

hcloud_network.private_network: Creation complete after 1s [id=REDACTED]

hcloud_network_subnet.private_network_subnet: Creating...

hcloud_network_subnet.private_network_subnet: Creation complete after 1s [id=REDACTED-10.0.1.0/24]

hcloud_server.master-node: Creating...

hcloud_server.master-node: Creation complete after 2m46s [id=REDACTED]

hcloud_server.worker-node-1: Creating...

hcloud_server.worker-node-1: Creation complete after 5m35s [id=REDACTED]

Apply complete! Resources: 4 added, 0 changed, 0 destroyed.

Outputs:

network_id = "<your_network_id>"Copy the network ID from the output and save it for later.

Step 5 - Access your cluster (Optional)

To access your cluster from your local machine, you need to have the kubectl command-line tool.

-

On macOS, you can install it with homebrew:

brew install kubectl -

On Ubuntu, you can install it with

snap:snap install kubectl --classic

After installing kubectl, you need to copy the kubeconfig file from the master node to your local machine. You can do

this by running the following command on your local machine:

mkdir ~/.kube

scp -i <your_private_ssh_key> cluster@<master_node_public_ip>:/etc/rancher/k3s/k3s.yaml ~/.kube/configMake sure that the IP in ~/.kube/config is the public IP of the master node, not 127.0.0.1.

For example:

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: ...

# NOT https://127.0.0.1:6443

server: https://<master_node_public_ip>:6443

name: default

contexts:

- context:

cluster: default

user: default

name: default

current-context: default

kind: Config

preferences: { }

users:

- name: default

user:

client-certificate-data: ...

client-key-data: ...After that, check if your cluster is up and running:

kubectl get nodesThis command should return something like this:

NAME STATUS ROLES AGE VERSION

worker-node-1 Ready <none> 90s v1.33.5+k3s1

master-node Ready control-plane,master 116s v1.33.5+k3s1Step 6 - Create an example deployment (Optional)

The example below explains how to use the Hetzner Cloud Controller to host an example website via a Hetzner Cloud Load Balancer (see Hetzner Cloud Controller Manager documentation: Reference existing Load Balancers).

First, install some prerequisites:

-

Nginx Ingress Controller as a DaemonSet:

wget -q https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.13.0/deploy/static/provider/cloud/deploy.yaml -O /tmp/nginx.yamlNow edit the

/tmp/nginx.yamlfile.sed -i -e "s/kind: Deployment/kind: DaemonSet/g" /tmp/nginx.yaml sed -i -e "s/data: null/data:/g" /tmp/nginx.yaml sed -i -e '/^kind: ConfigMap.*/i \ \ compute-full-forwarded-for: \"true\"\n \ use-forwarded-headers: \"true\"\n \ use-proxy-protocol: \"true\"' /tmp/nginx.yaml sed -i -e "s/strategy:/updateStrategy:/g" /tmp/nginx.yamlClick here to see what the `sed` commands do

-

Replace

kind: Deploymentwithkind: DaemonSet--- apiVersion: apps/v1 kind: DaemonSet metadata: labels: app.kubernetes.io/component: controller app.kubernetes.io/instance: ingress-nginx [...] -

In

kind: ConfigMap, add three lines belowdata:--- apiVersion: v1 data: compute-full-forwarded-for: "true" use-forwarded-headers: "true" use-proxy-protocol: "true" kind: ConfigMap [...] -

Replace

strategy:withupdateStrategy:updateStrategy: rollingUpdate: maxUnavailable: 1 type: RollingUpdate

Now apply the file:

kubectl apply -f /tmp/nginx.yaml -

-

Hetzner Cloud Controller and API token

Replace

<your_api_token>with your own Hetzner API token, and<network_id>with the network ID you copied from the output in step 4.kubectl -n kube-system apply -f https://github.com/hetznercloud/hcloud-cloud-controller-manager/releases/download/v1.26.0/ccm-networks.yaml kubectl -n kube-system create secret generic hcloud \ --from-literal=token=<your_api_token> \ --from-literal=network=<network_id>

Now check if your nodes have the correct provider ID:

kubectl describe node master-node | grep "ProviderID"

kubectl describe node worker-node-0 | grep "ProviderID"The output should be hcloud://<id>

Now create the service:

nano example-service.yamlAdd this content:

apiVersion: apps/v1

kind: Deployment

metadata:

name: simple-web

namespace: ingress-nginx

spec:

replicas: 3

selector:

matchLabels:

app: ingress-nginx

template:

metadata:

labels:

app: ingress-nginx

spec:

containers:

- name: simple-web

image: httpd:latest

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: ingress-nginx-controller

namespace: ingress-nginx

annotations:

load-balancer.hetzner.cloud/name: "kubernetes-load-balancer"

load-balancer.hetzner.cloud/location: "fsn1"

load-balancer.hetzner.cloud/use-private-ip: "true"

# If you have a public domain and a SSL certificate, upload the

# SSL certificate in Hetzner Console and uncomment the lines below.

#load-balancer.hetzner.cloud/protocol: "https"

#load-balancer.hetzner.cloud/http-redirect-http: "true"

#load-balancer.hetzner.cloud/http-certificates: "certificate-name"

spec:

type: LoadBalancer

selector:

app: ingress-nginx

ports:

- protocol: TCP

# If you have a public domain and a SSL certificate,

# use port 443 instead of port 80.

port: 80

targetPort: 80Next, apply the file and view the pods and the deployment. Note that applying the file will automatically create a new Load Balancer in your Hetzner Cloud account.

kubectl apply -f example-service.yaml

kubectl -n ingress-nginx get pods

kubectl -n ingress-nginx get deployments

kubectl -n ingress-nginx get svcIn Hetzner Console, you should now see the Load Balancer. Give it some time to create the service and add the targets. If you added a TLS/SSL certificate, create a new A record that points your domain at the IP address of the new Load Balancer.

To stop the deployment and delete the Hetzner Cloud Load Balancer, you can run:

kubectl -n ingress-nginx delete deployment simple-web

kubectl -n ingress-nginx delete service ingress-nginx-controllerStep 7 - Destroy your cluster (Optional)

When you're done with your cluster, don't forget to destroy it to avoid being billed for resources you're not using.

Run the following command in the kubernetes-cluster directory on your local machine:

terraform destroy -var-file .tfvarsThe output log should be similar to this:

Plan: 0 to add, 0 to change, 4 to destroy.

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

hcloud_server.worker-node-1: Destroying... [id=REDACTED]

hcloud_server.worker-node-1: Destruction complete after 1m0s

hcloud_server.master-node: Destroying... [id=REDACTED]

hcloud_server.master-node: Destruction complete after 1m0s

hcloud_network_subnet.private_network_subnet: Destroying... [id=REDACTED-

hcloud_network_subnet.private_network_subnet: Destruction complete after 1s

hcloud_network.private_network: DestroyStep 8 - Calculate the costs of running the cluster

Before deploying, it's important to think about the cost of the cluster. This will help you avoid any surprises when you get your bill at the end of the month. The cost will depend on the following factors:

- The number of worker nodes

- The plan of the master and worker nodes

You can find a full list of Arm64 plans offered by Hetzner here.

You would usually want to pick the same plan for the master and worker nodes. This is because the master node also acts as a worker.

For example, let's pick the cax11 plan for the master and worker nodes.

At the time of writing, this plan costs 0.006€/hour or 3.95€/month.

If you have 1 master node and 3 worker nodes, you would calculate it like this:

-

The hourly cost would be:

0.006 + (0.006 * 3) = 0.024€ / hour -

The monthly cost would be:

3.95 + (3.95 * 3) = 15.80€ / month

You can replace the numbers with variables:

price_master + (price_worker * number_of_workers) = total_priceConclusion

Congratulations! You've just created your own scalable Kubernetes cluster on Hetzner Cloud. You can now deploy your applications and scale them as you see fit. If you want to learn more about Kubernetes, you can check out the official documentation.