Introduction

In this tutorial, you will learn how to install an ELK stack using Docker Compose on a server running Ubuntu 22.04. The ELK stack consists of Elasticsearch, Logstash, and Kibana.

- Elasticsearch is a search and analytics engine.

- Kibana is a user interface for data analysis.

- Logstash can analyse logs from applications.

Prerequisites

- A server running Ubuntu version 22.04 or later

- SSH access to that server

- Access to the root user or a user with sudo permissions

- Basic knowledge of Docker, Docker Compose, Elasticsearch and YAML

Example terminology

- Username: `holu`

- Hostname: `<your_host>`

Step 1 - Install Docker Compose

You may skip this step if you have already installed Docker and Docker Compose on your server. First, SSH into your server. Replace `holu` with your own username and `<your_host>` with the IP address of your server:

```bash
ssh holu@<your_host>
```

Make sure to update the apt package lists and install cURL:

```bash
sudo apt-get update && sudo apt-get install curl -y
```

After making sure cURL is installed, you can use the convenience script provided by Docker to install Docker as well as the Docker Compose plugin:

```bash
curl -fsSL https://get.docker.com | sh
```

This command downloads the script from get.docker.com and pipes it to `sh`, which executes the script and installs Docker. The `-fsSL` options make cURL fail on HTTP errors instead of passing an error page to `sh`, run silently while still showing errors, and follow redirects.

Finally, add your user to the `docker` group so that you don't need to use `sudo` every time you run the `docker` command. Replace `holu` with your own username:

```bash
sudo usermod -aG docker holu
```

Log out and log in again to apply the change.
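After logging back in, you can confirm that the tools used in the rest of this tutorial are available and that the new group membership is active. The following is a minimal sketch. The helper names `require_tools` and `in_group` are made up for this example and are not part of Docker; `docker compose version` in the usage comment is the regular Docker Compose command.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of Docker) for checking the Step 1 setup.

# Print every command from the argument list that is not on PATH.
# Returns 0 if all commands are found, 1 otherwise.
require_tools() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Return 0 if the current session is a member of the given group.
# `id -nG` without a user name lists the groups of the current process,
# so a new membership only shows up after logging out and in again.
in_group() {
  local g
  for g in $(id -nG); do
    [ "$g" = "$1" ] && return 0
  done
  return 1
}

# Usage on the server:
#   require_tools docker curl && docker compose version
#   in_group docker || echo "log out and in again to pick up the docker group"
```

If `in_group docker` fails right after running `usermod`, that is expected until you start a new login session.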

Step 2 - Create the Docker containers

Step 2.1 - Create docker-compose.yaml

The `docker-compose.yaml` file declares all the infrastructure for the ELK stack. It is used to create several containers with a single command.

Containers are often not stored in the user's home directory but in `/opt/containers`. Create that directory and give your user ownership of it (replace `holu` with your own username):

```bash
sudo mkdir -p /opt/containers && cd /opt/containers
sudo chown -R holu /opt/containers
```

Create a new folder for the stack, and in it a `docker-compose.yaml` file and an `esdata` directory:

```bash
mkdir elk-stack && cd elk-stack && touch docker-compose.yaml && mkdir esdata && sudo chown -R 1000:1000 esdata
```

The `esdata` directory stores the Elasticsearch data permanently outside the Elasticsearch container. Its ownership is changed to UID 1000 because the Elasticsearch process inside the container runs as the user with that UID.
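If Elasticsearch later refuses to start because it cannot write to its data directory, wrong ownership of `esdata` is a likely cause. A quick check with GNU `stat` (as shipped with Ubuntu) could look like this. `check_owner` is a hypothetical helper written for this sketch.

```bash
#!/usr/bin/env bash
# Hypothetical helper: compare the owner of a directory with an expected UID:GID.
# Uses GNU stat (`-c '%u:%g'` prints numeric user and group IDs).
check_owner() {
  local dir="$1" expected="$2" actual
  actual="$(stat -c '%u:%g' "$dir")" || return 1
  if [ "$actual" = "$expected" ]; then
    echo "ok: $dir is owned by $actual"
  else
    echo "mismatch: $dir is owned by $actual, expected $expected" >&2
    return 1
  fi
}

# Usage from the elk-stack directory:
#   check_owner esdata 1000:1000
```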

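We want to use Docker Compose to create three Docker containers: `setup`, which sets the password of the built-in `kibana_system` user and then exits, `elasticsearch`, and `kibana`.

To create those three containers, add the following content to the `docker-compose.yaml` file: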

```yaml
version: "3"

services:
  setup:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.15.1
    environment:
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - KIBANA_PASSWORD=${KIBANA_PASSWORD}
    container_name: setup
    command:
      - bash
      - -c
      - |
        echo "Waiting for Elasticsearch availability";
        until curl -s http://elasticsearch:9200 | grep -q "missing authentication credentials"; do sleep 30; done;
        echo "Setting kibana_system password";
        until curl -s -X POST -u "elastic:${ELASTIC_PASSWORD}" -H "Content-Type: application/json" http://elasticsearch:9200/_security/user/kibana_system/_password -d "{\"password\":\"${KIBANA_PASSWORD}\"}" | grep -q "^{}"; do sleep 10; done;
        echo "All done!";

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:8.15.1
    # give the container a name
    # this will also set the container's hostname as elasticsearch
    container_name: elasticsearch
    # this will store the data permanently outside the Elasticsearch container
    volumes:
      - ./esdata:/usr/share/elasticsearch/data
    # this will allow access to the content from outside the container
    ports:
      - 9200:9200
    environment:
      - discovery.type=single-node
      - cluster.name=elasticsearch
      - bootstrap.memory_lock=true
      # limits the Elasticsearch JVM heap to 1 GB
      - ES_JAVA_OPTS=-Xms1g -Xmx1g
      # the password for the 'elastic' user
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - xpack.security.http.ssl.enabled=false
    # allow the container to lock memory, as requested by bootstrap.memory_lock
    ulimits:
      memlock:
        soft: -1
        hard: -1

  kibana:
    image: docker.elastic.co/kibana/kibana:8.15.1
    container_name: kibana
    ports:
      - 5601:5601
    environment:
      # remember the container_name for elasticsearch?
      # we use it here to access that container
      - ELASTICSEARCH_HOSTS=http://elasticsearch:9200
      - ELASTICSEARCH_USERNAME=kibana_system
      - ELASTICSEARCH_PASSWORD=${KIBANA_PASSWORD}
      # change this to true if you want to send
      # telemetry data to Elastic
      - TELEMETRY_ENABLED=false
```

One element is still missing, though: the `.env` file. It stores secrets such as passwords and API tokens so that they don't have to be written into your configuration or code.

Docker Compose automatically reads the `.env` file in the project directory and replaces variables like `${MY_VARIABLE}` in `docker-compose.yaml` with the values defined in `.env`.
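Instead of inventing the two passwords yourself, you can let a small script generate them. This is a sketch and not part of the original setup. It assumes it is run from the `elk-stack` directory, and the password format (32 hexadecimal characters derived from 16 bytes of `/dev/urandom`) is an arbitrary choice that avoids characters with a special meaning in `.env` files or shell commands. The function names are hypothetical. If you use it, you can skip writing the file by hand.

```bash
#!/usr/bin/env bash
# Sketch: generate the .env file used by docker-compose.yaml.
# ELASTIC_PASSWORD and KIBANA_PASSWORD match the variable names in the tutorial;
# the helper names and the password format are assumptions made for this example.

# Print 32 hex characters derived from 16 bytes of /dev/urandom.
random_password() {
  od -An -tx1 -N16 /dev/urandom | tr -d ' \n'
}

# Write both passwords to the given file (default: .env) with mode 600.
# Refuses to overwrite an existing file so that existing passwords are kept.
write_env_file() {
  local target="${1:-.env}"
  if [ -e "$target" ]; then
    echo "refusing to overwrite existing $target" >&2
    return 1
  fi
  (
    umask 077  # new file is readable and writable by its owner only
    {
      echo "ELASTIC_PASSWORD=$(random_password)"
      echo "KIBANA_PASSWORD=$(random_password)"
    } > "$target"
  )
}

# Usage from /opt/containers/elk-stack:
#   write_env_file .env && cat .env
```

Refusing to overwrite matters here: once Elasticsearch has stored its data in `esdata`, changing the password in `.env` alone does not change the password of the existing `elastic` user.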

Create `.env` in the `elk-stack` directory and add the following lines:

```env
ELASTIC_PASSWORD=<your-elastic-password>
KIBANA_PASSWORD=<your-kibana-password>
```

Step 2.2 - Keep your installation secure before starting the containers

The ports above (9200 and 5601) will be reachable from anywhere on the internet.

If the Elasticsearch ports are open to the internet, you may receive an abuse email about it (see "CERT-Bund Reports").

To secure the Docker environment, bind the published ports to the local machine only.

Edit the ports in `docker-compose.yaml`:

```yaml
  elasticsearch:
    ports:
      - 127.0.0.1:9200:9200

  kibana:
    ports:
      - 127.0.0.1:5601:5601
```

You should also have ufw installed and make sure that the ports 9200 and 5601 in particular are blocked. The output of `sudo ufw status` should look similar to this:

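```
To                         Action      From
--                         ------      ----
22                         ALLOW       Anywhere
80                         ALLOW       Anywhere
443                        ALLOW       Anywhere
9200                       DENY        Anywhere
5601                       DENY        Anywhere
```

Keep in mind that Docker publishes container ports by adding its own iptables rules, and traffic to published ports is handled before it reaches the ufw rules. A port published as `9200:9200` can therefore still be reachable even if ufw denies it, which is why binding the ports to `127.0.0.1` is the important part.

To access these services from outside, you need to set up a reverse proxy using Nginx or similar.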

Example with Nginx:

```bash
sudo apt install nginx -y
sudo nano /etc/nginx/sites-available/kibana
```

Add this content, replacing `kibana.example.com` with your own domain:

```nginx
server {
    listen 80;
    server_name kibana.example.com;

    location / {
        proxy_pass http://127.0.0.1:5601; # Kibana port
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

Enable the site and start Nginx:

```bash
sudo rm -rf /etc/nginx/sites-available/default /etc/nginx/sites-enabled/default
sudo ln -s /etc/nginx/sites-available/kibana /etc/nginx/sites-enabled/
sudo nginx -t
sudo systemctl restart nginx
sudo systemctl enable nginx
```
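Before starting the containers, you can double-check that no port mapping in `docker-compose.yaml` still publishes a port on all interfaces. The sketch below is a plain-text check with `grep`, not a YAML parser: it assumes short-syntax port entries such as `- 9200:9200` or `- 127.0.0.1:9200:9200`, and the function name `check_ports` is made up for this example. After Step 3, it would also report the Logstash mapping `- 5044:5044` unless you switch to the commented secure option.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report published ports that are bound to all interfaces.
# Plain-text check with grep, not a YAML parser. It only understands
# short-syntax list entries such as:
#   - 9200:9200              (all interfaces, reported)
#   - 0.0.0.0:9200:9200      (all interfaces, reported)
#   - 127.0.0.1:9200:9200    (loopback only, not reported)
check_ports() {
  local file="${1:-docker-compose.yaml}"
  if [ ! -r "$file" ]; then
    echo "cannot read $file" >&2
    return 2
  fi
  # Print matching lines with line numbers; a match means an exposed port.
  if grep -nE '^[[:space:]]*-[[:space:]]*"?(0\.0\.0\.0:)?[0-9]+:[0-9]+' "$file"; then
    echo "warning: the lines above publish ports on all interfaces" >&2
    return 1
  fi
  echo "ok: no ports published on all interfaces in $file"
}

# Usage from the elk-stack directory:
#   check_ports docker-compose.yaml
```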

Step 2.3 - Start the containers

You can now run docker compose to start everything up:

```bash
docker compose up -d
```

Output:

```
[+] Running 3/4
 ⠇ Network elk-stack_default  Created
 ✔ Container kibana           Started
 ✔ Container setup            Started
 ✔ Container elasticsearch    Started
```

You can use the `docker ps` command to check if everything works as expected.

```
holu@<your_host>:/opt/containers/elk-stack$ docker ps
CONTAINER ID   IMAGE                                                  COMMAND       CREATED              STATUS              PORTS                                       NAMES
<id>           docker.elastic.co/kibana/kibana:8.15.1                 "<command>"   About a minute ago   Up About a minute   0.0.0.0:5601->5601/tcp, :::5601->5601/tcp   kibana
<id>           docker.elastic.co/elasticsearch/elasticsearch:8.15.1   "<command>"   About a minute ago   Up About a minute   9200/tcp, 9300/tcp                          elasticsearch
```

You can now open Kibana in a web browser. If you kept the default port mappings, enter `http://<your_host>:5601` in the URL bar. If you followed the security suggestions in Step 2.2, use your reverse proxy domain instead, for example `http://kibana.example.com`.
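Elasticsearch and Kibana usually need a minute or two before they answer requests, and the `setup` container waits for Elasticsearch in an endless `until curl ...` loop. If you want to wait for Elasticsearch from the server's shell instead, a bounded retry loop is a reasonable sketch. The `retry` function is a hypothetical helper; the usage lines assume that Elasticsearch is published on port 9200 of the server (as in both port variants above) and that `.env` contains only simple `KEY=value` lines.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command until it succeeds, at most MAX_TRIES times,
# sleeping DELAY seconds between attempts. Unlike the `until ...; do sleep; done`
# loop in the setup container, this gives up instead of waiting forever.
retry() {
  local max_tries="$1" delay="$2" attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max_tries" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Usage on the server, from the elk-stack directory:
#   set -a; . ./.env; set +a    # export ELASTIC_PASSWORD into the shell
#   retry 20 5 curl -sf -u "elastic:${ELASTIC_PASSWORD}" http://127.0.0.1:9200 >/dev/null \
#     && echo "Elasticsearch is up"
```

With `-f`, cURL also treats an authentication error as a failure, so a wrong password makes the loop give up after the last attempt rather than report success.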

-

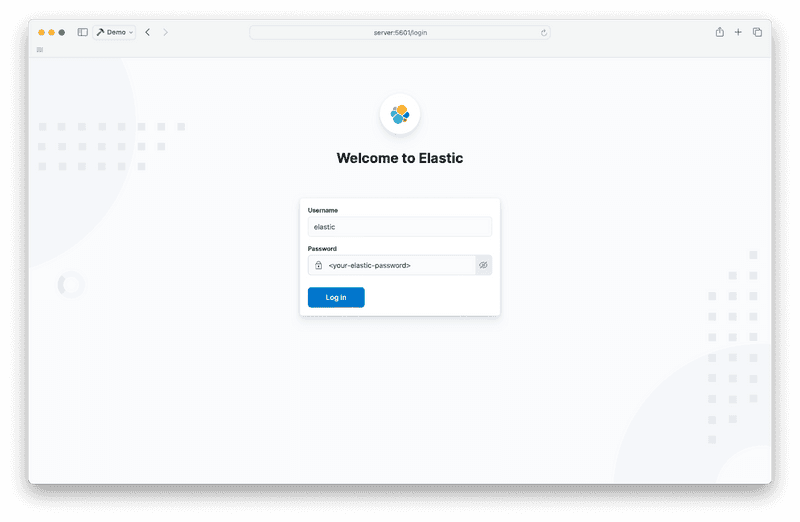

Login with the username

elasticand the password you set earlier in the.envfile.

-

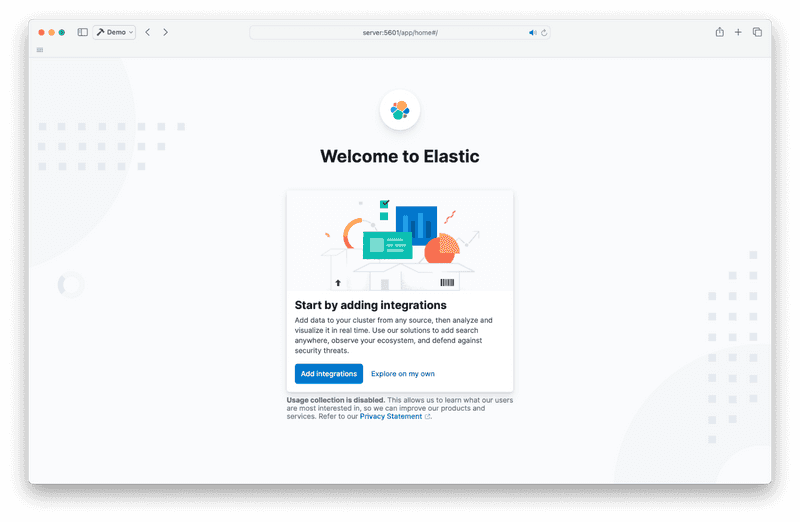

If you have this screen when logging in, click on "Explore on my own".

-

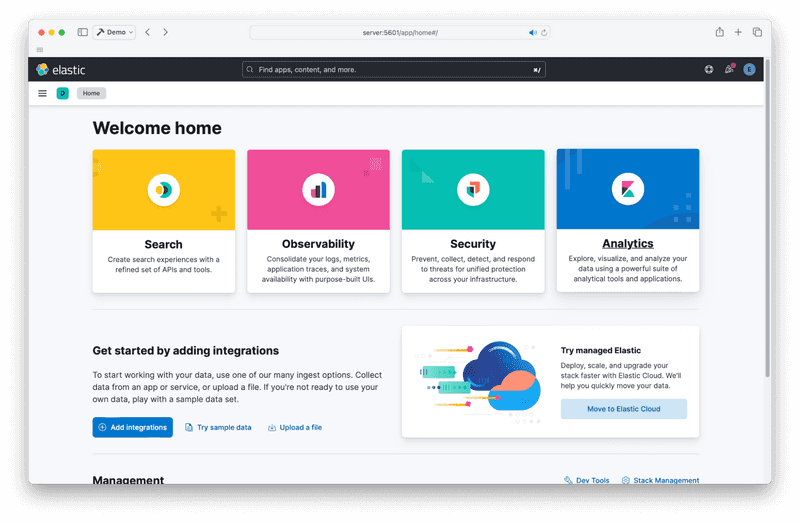

You should now be able to access the Kibana homepage. It looks like this:

Step 3 - Logstash

Now it’s time to add the final piece of the puzzle, Logstash. Logstash can analyse logs from your application(s) and feeds the analysed logs to Elasticsearch.

Edit docker-compose.yaml and add a fourth container in the "services" section below "kibana".

```yaml
  logstash:
    image: docker.elastic.co/logstash/logstash:8.15.1
    container_name: logstash
    command:
      - /bin/bash
      - -c
      - |
        cp /usr/share/logstash/pipeline/logstash.yml /usr/share/logstash/config/logstash.yml
        echo "Waiting for Elasticsearch availability";
        until curl -s http://elasticsearch:9200 | grep -q "missing authentication credentials"; do sleep 1; done;
        echo "Starting logstash";
        /usr/share/logstash/bin/logstash -f /usr/share/logstash/pipeline/logstash.conf
    ports:
      - 5044:5044
      # Secure option would be:
      # - 127.0.0.1:5044:5044
    environment:
      - xpack.monitoring.enabled=false
      - ELASTIC_USER=elastic
      - ELASTIC_PASSWORD=${ELASTIC_PASSWORD}
      - ELASTIC_HOSTS=http://elasticsearch:9200
    volumes:
      - ./logstash.conf:/usr/share/logstash/pipeline/logstash.conf
```

Setting up Logstash is a bit more complicated. You need one additional configuration file, `logstash.conf`. Logstash works with so-called "pipelines". A pipeline describes what Logstash should do: where the logs come from, how to analyse them, and where to send them. The pipeline is defined in the file `logstash.conf`.

This is one of the most basic pipelines you could have:

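```conf
input {
  file {
    path => "/var/log/dpkg.log"
    start_position => "beginning"
  }
}

filter { }

output {
  elasticsearch {
    hosts => "${ELASTIC_HOSTS}"
    user => "elastic"
    password => "${ELASTIC_PASSWORD}"
    index => "logstash-%{+YYYY.MM.dd}"
  }
  stdout { }
}
```

It’s pretty self-explanatory. It takes a file as input (in this case `/var/log/dpkg.log` inside the Logstash container) and outputs to Elasticsearch and stdout. The `${ELASTIC_HOSTS}` and `${ELASTIC_PASSWORD}` references are filled in from the environment variables set for the `logstash` service in `docker-compose.yaml`. To read the server's own `/var/log/dpkg.log` instead, you would have to mount it into the container, for example with `- /var/log/dpkg.log:/var/log/dpkg.log:ro` under `volumes`.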

Put the example above in your logstash.conf file.

The `elk-stack` directory should now contain the following files and directories:

```
elk-stack/
├── .env
├── docker-compose.yaml
├── esdata/
└── logstash.conf
```

You can now start Logstash using the following command:

docker compose up -dOutput:

[+] Running 4/4

:heavy_check_mark: Container logstash Started

:heavy_check_mark: Container setup Started

:heavy_check_mark: Container elasticsearch Running

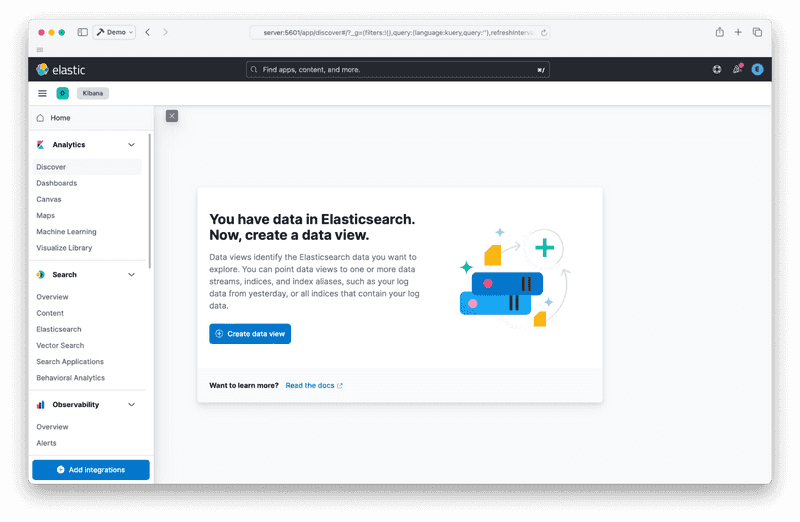

:heavy_check_mark: Container kibana RunningYou can now access Logstash from Kibana. You will need to create a logstash data view first.
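Before creating the data view, you can check from the server that Logstash has actually written data. The setting `index => "logstash-%{+YYYY.MM.dd}"` in `logstash.conf` creates one index per day, named after the event's `@timestamp`, which Logstash formats in UTC. Because the pipeline has no date filter, `@timestamp` is the time Logstash read the line, so today's index should exist. The sketch below derives today's index name with `date -u`. The function name is hypothetical, and the `curl` call in the usage comment assumes `ELASTIC_PASSWORD` is set in your shell and Elasticsearch is reachable on `127.0.0.1:9200`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print today's Logstash index name in UTC,
# matching the pattern logstash-%{+YYYY.MM.dd} from logstash.conf.
todays_logstash_index() {
  date -u +'logstash-%Y.%m.%d'
}

# Usage on the server (lists the index with its document count):
#   curl -s -u "elastic:${ELASTIC_PASSWORD}" \
#     "http://127.0.0.1:9200/_cat/indices/$(todays_logstash_index)?v"
```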

-

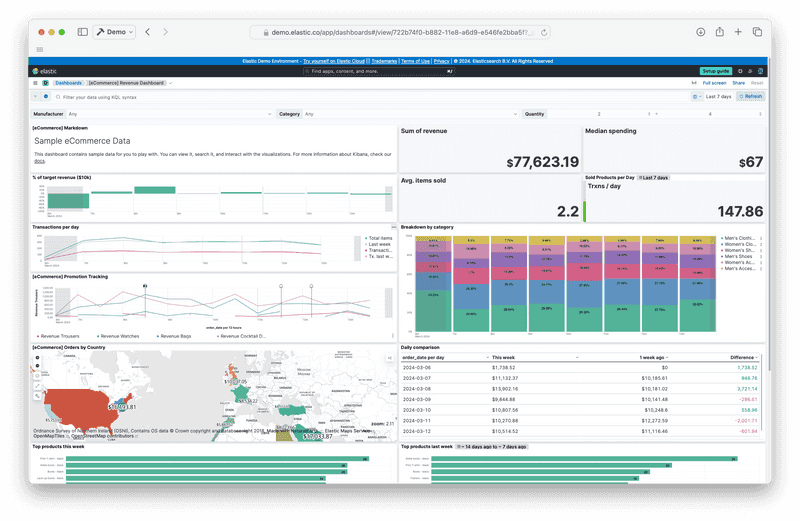

Go on the discover page of "Analytics". You should see something like this:

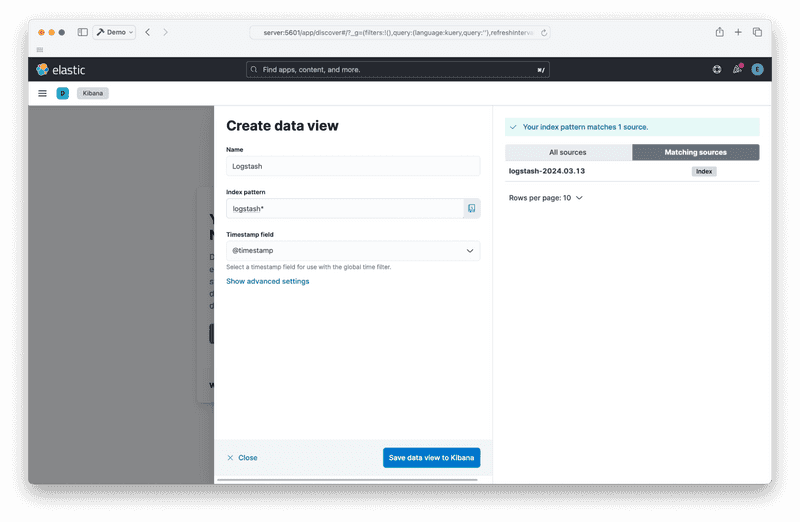

-

Create your data view by clicking on the "Create data view" button:

-

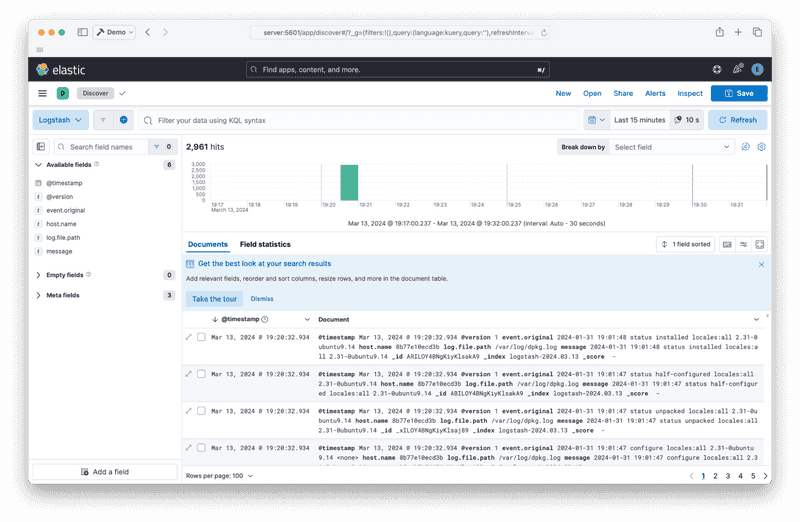

After you saved the data view, you should be able to see logs coming from Logstash:

Step 4 - Destroy the stack

Lastly, to stop the stack and remove the containers, run the following command:

```bash
docker compose down
```

Output:

```
[+] Running 5/5
 ✔ Container logstash         Removed
 ✔ Container elasticsearch    Removed
 ✔ Container kibana           Removed
 ✔ Container setup            Removed
 ✔ Network elk-stack_default  Removed
```

This command does not delete the Elasticsearch data in the `esdata` directory. If you no longer need the data, remove that directory as well, for example with `sudo rm -rf esdata`.

Conclusion

That’s it! You should have a working ELK stack running with Docker Compose. Next steps would be to add log exporters such as Filebeat, or check out the official documentation.